Psychoacoustics Explained: The Science of Audio

Psychoacoustics is about what’s under the hood of all things audio. Sound, like the other sensations we experience, is a matter of perception. Just how do we perceive sound? Our bodies have evolved complex mechanisms that allow us to hear sounds with frequency content between 20 Hz and 20 kHz, but how does this work?

As a musician, you’ll benefit massively from an understanding of psychoacoustics. Our stereo field, for example, is the digital world’s emulation of psychoacoustics. Panning from left to right, having elements deeper in your mix than others, making sounds seem wider than they are… this is all psychoacoustics. But how does it work in the real world?

The truth is that you’re already dabbling with psychoacoustics every time you make music. Why do we perceive the sounds of instruments as we do?

With psychoacoustics in mind, you’ll be able to create more immersive music for your fans because you’ll understand why their ears receive what they do.

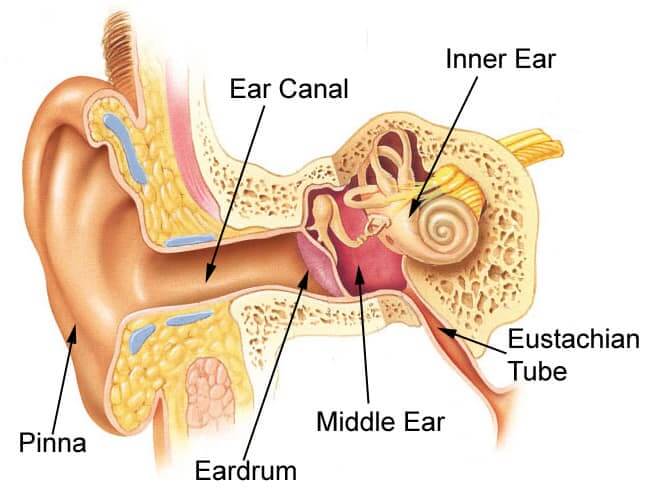

With that said, let’s begin with the ear…

How Does the Ear Work?

There are three parts of the ear that affect our perception of sound. These are the pinna, the eardrum and the cochlea.

The Pinna (Auricle)

The pinna, also known as the auricle, is the big flap of skin you can actually see. Thanks to evolution, its shape catches sound waves and funnels them towards the eardrum.

The Eardrum

The eardrum is a thin membrane that sits at the end of our ear canals. It acts as a transducer, converting energy from one form into another: changes in air pressure become mechanical vibration. Sound waves cause the eardrum to vibrate, and those vibrations set three small bones attached to it (the ossicles) in motion. In turn, the ossicles amplify the vibrations and pass them on to the cochlea. The middle ear also connects to the eustachian tube, which equalizes the pressure between the air outside the ear and the air within.

Bonus note: the eustachian tube is what causes our ears to pop on planes! When pressure builds up in the middle ear, which is where the three ossicles live and where the eustachian tube connects, the tube opens up to relieve the pressure. It’s this opening of the tube that causes our ears to pop.

The Cochlea

Finally, we have the cochlea which lives in the inner ear. The cochlea is shaped like a snail shell and it’s full of fluid that moves in response to vibrations that have been received from the ossicles. In response to the moving fluid, thousands of tiny hairs are also set into motion. This movement by the tiny hairs converts the vibrations into electrical signals which are then sent along the auditory nerve to the brain.

If you’ve read our article about how microphones work, you might be thinking that ears and microphones aren’t so different. Well, you’d be right. They act in pretty much the same way, but one is organic while the other is mechanical!

The Limitations of Our Ears

This beautiful story of ear mechanics is very cool, but it’s not without its limitations.

We can only hear frequencies between 20 Hz and 20 kHz, and as we get older the upper limit typically drops to around 16 kHz or lower. We can also damage our hearing if we’re not careful. Noise-induced hearing loss and tinnitus impact our perception of audio, so if a producer or musician suffers from either of these issues then they must put steps in place to achieve a balanced mix.

Because of how we perceive sound, you may find that applying a high-pass filter around the 30 Hz mark cleans up your mix. This is because it’s removing low-end information that is harder to hear, and therefore less important to our ears. Be wary, though, because this isn’t always the case.
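To make that concrete, here is a minimal sketch of a gentle high-pass filter in Python. The function names, the 30 Hz cutoff and the 44.1 kHz sample rate are illustrative assumptions, and a first-order filter like this one is much gentler than the filters in most DAWs.

```python
import math

def high_pass(samples, cutoff_hz=30.0, sample_rate=44100):
    """First-order (roughly 6 dB/octave) high-pass filter over a list of floats."""
    rc = 1.0 / (2.0 * math.pi * cutoff_hz)  # time constant for the cutoff
    dt = 1.0 / sample_rate                  # time between samples
    alpha = rc / (rc + dt)
    out = []
    prev_in = 0.0
    prev_out = 0.0
    for x in samples:
        # Passes fast changes (highs) and lets slow drift (lows) decay away.
        y = alpha * (prev_out + x - prev_in)
        out.append(y)
        prev_in, prev_out = x, y
    return out

def sine(freq_hz, seconds=1.0, sample_rate=44100):
    """Test tone: a sine wave with a peak level of 1.0."""
    n = int(seconds * sample_rate)
    return [math.sin(2.0 * math.pi * freq_hz * i / sample_rate) for i in range(n)]

def settled_peak(samples):
    """Peak level over the second half, once the filter has settled."""
    half = samples[len(samples) // 2:]
    return max(abs(s) for s in half)

if __name__ == "__main__":
    print("10 Hz after filtering:", round(settled_peak(high_pass(sine(10.0))), 3))
    print("1 kHz after filtering:", round(settled_peak(high_pass(sine(1000.0))), 3))
```

The sub-bass tone comes out much quieter while the 1 kHz tone passes almost untouched, which is the behaviour described above.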

Psychoacoustics in Practice

Let’s talk about how psychoacoustics shapes the products we buy and our interaction with audio, shall we?

We’ve discussed that we can hear frequencies between 20 Hz and 20 kHz, but what we haven’t mentioned is that our ears aren’t equally sensitive to all of these frequencies. We’re much more sensitive to upper-mid frequencies, roughly between 2,500 Hz and 5,000 Hz, than to lower ones.
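One rough way to put numbers on this is the standard A-weighting curve, which sound level meters use to approximate how sensitive our ears are at moderate listening levels. The sketch below is an illustration only: A-weighting is a simplification of real hearing, and the function name is our own.

```python
import math

def a_weighting_db(freq_hz):
    """Approximate relative ear sensitivity in dB (A-weighting, 0 dB at 1 kHz)."""
    f2 = freq_hz ** 2
    numerator = (12194.0 ** 2) * f2 ** 2
    denominator = (
        (f2 + 20.6 ** 2)
        * math.sqrt((f2 + 107.7 ** 2) * (f2 + 737.9 ** 2))
        * (f2 + 12194.0 ** 2)
    )
    # The +2.00 dB offset normalises the curve to 0 dB at 1 kHz.
    return 20.0 * math.log10(numerator / denominator) + 2.00

if __name__ == "__main__":
    for freq in (50, 100, 1000, 3000, 10000):
        print(freq, "Hz:", round(a_weighting_db(freq), 1), "dB")
```

Low frequencies come out well below 0 dB, while the region around 3 kHz comes out slightly above it, matching the idea that our ears favour the upper mids.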

This information is important when both writing music and designing audio gear. Lossy compression, for example, uses this information to snip away audio information that “we won’t notice” when we play the audio file. It’s psychoacoustics that helps us decide what waveform information we should cut and what we should keep without affecting the listeners’ perception of the audio. If there’s frequency content outside of our range of hearing then we should instantly cut that.

But it’s not just frequency content that comes into consideration with practices such as lossy compression. Sounds with amplitudes so low that we can’t hear them are perceived by our ears as silence, so the lossy algorithm cuts them too.
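As a rough illustration of the "too quiet to hear" test, the sketch below uses a commonly cited approximation of the threshold of hearing in quiet (often attributed to Terhardt). The function names and the choice of dB SPL as the unit are assumptions made for this example, and real codecs use far more elaborate models.

```python
import math

def threshold_in_quiet_db(freq_hz):
    """Approximate quietest audible level (dB SPL) at a given frequency."""
    khz = freq_hz / 1000.0
    return (
        3.64 * khz ** -0.8
        - 6.5 * math.exp(-0.6 * (khz - 3.3) ** 2)
        + 1e-3 * khz ** 4
    )

def is_audible(freq_hz, level_db_spl):
    """True if a tone is inside 20 Hz to 20 kHz and above the threshold in quiet."""
    if not 20.0 <= freq_hz <= 20000.0:
        return False  # outside our range of hearing, so safe to discard
    return level_db_spl > threshold_in_quiet_db(freq_hz)

if __name__ == "__main__":
    # The same 10 dB SPL tone is inaudible at 100 Hz but audible at 3.3 kHz.
    print(is_audible(100.0, 10.0))
    print(is_audible(3300.0, 10.0))
```

Masking is deliberately left out here; this only covers the frequency-range and amplitude checks.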

There are also sounds that mask one another. This is where one sound affects our perception of another sound when they’re both present at the same time. Sounds that play out too quickly for our ears to perceive, frequencies so close to one another that we hear them blended together as one, and quieter noises that louder noises drown out are all things that a lossy compression algorithm would leave out of the file.

So, sounds that our ears can’t perceive due to frequency, amplitude, or masking determine what frequencies don’t feature in the lossy file. And the resulting file size can be up to ten times smaller than the original. But the audio itself, in theory, sounds exactly the same.
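For a feel of where a figure like "ten times smaller" comes from, here is some back-of-the-envelope arithmetic. The CD-quality settings (44.1 kHz, 16-bit, stereo), the 128 kbps lossy bitrate and the three-minute song length are our own assumptions for the example, not figures from any particular codec.

```python
def pcm_bitrate(sample_rate=44100, bit_depth=16, channels=2):
    """Bits per second of uncompressed audio."""
    return sample_rate * bit_depth * channels

def file_size_bytes(bitrate_bps, seconds):
    """Approximate size of the audio data, ignoring headers and metadata."""
    return bitrate_bps * seconds // 8

if __name__ == "__main__":
    song_seconds = 180           # assumed three-minute track
    lossy_bitrate = 128_000      # assumed lossy bitrate in bits per second
    original = file_size_bytes(pcm_bitrate(), song_seconds)
    lossy = file_size_bytes(lossy_bitrate, song_seconds)
    print("Uncompressed bytes:", original)
    print("Lossy bytes:", lossy)
    print("Ratio:", round(original / lossy, 3))
```

Under these assumptions the lossy file is roughly eleven times smaller, which is in the same ballpark as the figure above.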

For more detail on file types, click here!

Psychoacoustics and Music Production

Because our ears are more sensitive to mid-high frequencies, we have an old friend to help us fight this bias: the renowned smiley face EQ setting.

With this EQ curve, we scoop the mids out and boost the low and high frequencies. At a low volume level, a broad bass and treble boost will make a mix sound more balanced and powerful.

But when we raise our output level, we’ll hear that our tonal balance has been seriously toyed with. We notice this because the frequency response of our ears evens out across the whole spectrum when we hear louder sounds.

But listening to loud music all day isn’t such a good idea for the health of our ears. Not only is mixing at high levels for extended periods of time damaging to our ears, but we’ll also perceive all of our elements as more upfront than they are.

When you lower the volume back down, you’ll notice that your mix sounds out of control because there seems to be no structure in terms of depth.

Unmasking Instruments

The more instruments we add to a mix, the more frequency masking occurs. If two instruments share a similar frequency range, like a kick drum and bass, this becomes very noticeable.

Masking occurs in all mixes and professional records. But too much of it is undesirable, and we have to do something about it.

In order to solve this problem, we can use an EQ to create a unique space in the spectrum for each new instrument in our mix.

Spatial Location

We can take advantage of how our ears tell what direction sound is coming from, which they’re very good at because there are two of them.

Width, from left to right, and depth, from front to back, make up our stereo field. We can use panning to determine where in the stereo field a particular instrument will sit from left to right.
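Under the hood, a pan control simply changes how much of the signal goes to each speaker. The sketch below shows one common approach, a constant-power pan law. The function name and the -1 to +1 pan range are assumptions for illustration, and different DAWs may use different pan laws.

```python
import math

def constant_power_pan(pan):
    """Return (left_gain, right_gain) for pan from -1.0 (left) to 1.0 (right)."""
    pan = max(-1.0, min(1.0, pan))        # clamp to the valid range
    angle = (pan + 1.0) * math.pi / 4.0   # maps pan to 0..pi/2
    # cos^2 + sin^2 = 1, so the total power stays the same wherever we pan.
    return math.cos(angle), math.sin(angle)

def pan_mono(samples, pan):
    """Place a mono signal in the stereo field; returns (left, right) lists."""
    left_gain, right_gain = constant_power_pan(pan)
    left = [s * left_gain for s in samples]
    right = [s * right_gain for s in samples]
    return left, right

if __name__ == "__main__":
    for position in (-1.0, -0.5, 0.0, 0.5, 1.0):
        l, r = constant_power_pan(position)
        print(position, round(l, 3), round(r, 3))
```

At the centre position both gains are about 0.707, so the sound does not get noticeably louder or quieter as it moves across the field.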

But we can also make our listeners perceive sounds as wider than they actually are. We do this by utilising the Haas effect, also known as the Precedence effect, by duplicating a sound so there are two identical copies with one panned hard left and one panned hard right.

By delaying only one of the copies by 30 milliseconds, our listeners will perceive them as one sound that’s wider than the two individual sounds. You could even experiment with delay times like 40 ms!
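Here is a minimal sketch of that trick on raw samples. The function name and the 44.1 kHz default sample rate are assumptions for the example; in practice you would usually reach for a delay plugin in your DAW instead.

```python
def haas_widen(mono, sample_rate=44100, delay_ms=30.0):
    """Duplicate a mono signal hard left and hard right, delaying the right copy."""
    delay_samples = round(sample_rate * delay_ms / 1000.0)
    # Pad with silence so both channels end up the same length.
    left = list(mono) + [0.0] * delay_samples
    right = [0.0] * delay_samples + list(mono)
    return left, right

if __name__ == "__main__":
    click = [1.0]  # a single-sample click
    left, right = haas_widen(click)
    print("Click arrives on the left at sample", left.index(1.0))
    print("Click arrives on the right at sample", right.index(1.0))
```

At 44.1 kHz, a 30 ms delay works out to 1,323 samples, so the right channel plays the same sound 1,323 samples after the left.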

Should you apply a shorter delay time, such as something between 5–15 ms, you’ll hear something like a metallic sound effect. This happens because the identical signals jump in and out of phase with one another.
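To see why short delays sound metallic, it helps to work out which frequencies cancel when a signal is summed with a delayed copy of itself (for example, when the stereo signal is folded down to mono, which is our assumption here). The function names below are illustrative.

```python
import math

def comb_gain(freq_hz, delay_ms):
    """Gain at one frequency when a signal is added to a delayed copy of itself."""
    tau = delay_ms / 1000.0
    # |1 + e^(-j*2*pi*f*tau)| simplifies to 2 * |cos(pi * f * tau)|.
    return abs(2.0 * math.cos(math.pi * freq_hz * tau))

def notch_frequencies(delay_ms, max_hz=20000.0):
    """Frequencies up to max_hz where the two copies cancel completely."""
    tau = delay_ms / 1000.0
    notches = []
    k = 0
    while True:
        freq = (2 * k + 1) / (2.0 * tau)  # odd multiples of 1 / (2 * delay)
        if freq > max_hz:
            break
        notches.append(freq)
        k += 1
    return notches

if __name__ == "__main__":
    print([round(f) for f in notch_frequencies(10.0, max_hz=500.0)])
```

With a 10 ms delay the cancellations land at odd multiples of 50 Hz, and that regular series of notches is what gives the effect its comb-like name and hollow, metallic character.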

This is comb filtering, and we’ve explored it in detail here!

We’re more sensitive to loud sounds with higher frequencies, so they usually sound like they’re at the front of a mix compared to lower frequencies. So to achieve greater depth (front-to-back space) in your mix, you can roll off high and low frequencies via filtering to push sounds further back.

This is a digital emulation of how we perceive sounds that are further away from us in nature. Air absorbs some of a sound’s energy as it travels, with the higher frequencies fading fastest, so a distant sound reaches us duller and quieter. Eventually, the sound disappears entirely if we are too far from the sound source.
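As a rough sketch of pushing a sound back, the example below dulls the top end with a simple low-pass filter and turns the level down. The function name, the 4 kHz cutoff and the -6 dB gain are arbitrary assumptions chosen for illustration, and this only handles the high-frequency roll-off, not the low end.

```python
import math

def push_back(samples, cutoff_hz=4000.0, gain_db=-6.0, sample_rate=44100):
    """Make a sound seem further away: first-order low-pass plus a level drop."""
    dt = 1.0 / sample_rate
    rc = 1.0 / (2.0 * math.pi * cutoff_hz)
    alpha = dt / (rc + dt)
    gain = 10.0 ** (gain_db / 20.0)  # convert dB to a linear multiplier
    out = []
    y = 0.0
    for x in samples:
        # Each output moves only part of the way towards the input,
        # which smooths out fast changes (high frequencies).
        y += alpha * (x - y)
        out.append(gain * y)
    return out

if __name__ == "__main__":
    steady = push_back([1.0] * 1000)
    fastest = push_back([1.0, -1.0] * 500)  # highest frequency the sample rate allows
    print("Steady signal level:", round(steady[-1], 3))
    print("Fast signal level:", round(max(abs(v) for v in fastest[-10:]), 3))
```

The steady signal only loses the 6 dB we asked for, while the rapidly alternating signal is reduced far more, much like the top end of a distant sound.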

If you want to practice your psychoacoustic techniques this very minute, you need some samples.

We at Mixxed work with a growing number of sample labels and contributors to provide you with an affordable sample subscription service that’s more accessible than any before.

You’ll have access to our growing catalogue of thousands of loops, one-shots and sound effects that you can browse, download and keep forever for less than $3 a month.

Sign up today to find your sound!